- Daily Success Snacks

- Posts

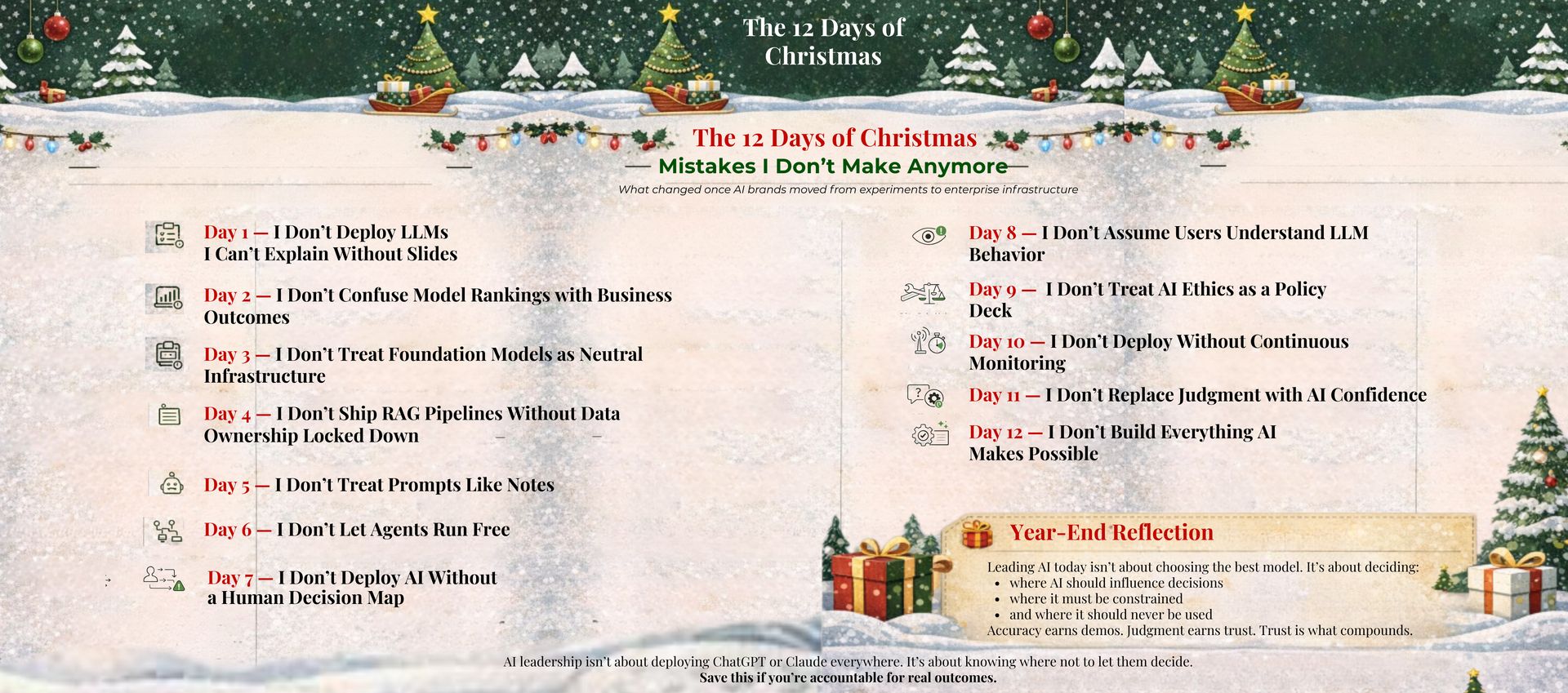

- The 12 AI and LLM Mistakes Enterprise Leaders Avoid to Maintain Trust

The 12 AI and LLM Mistakes Enterprise Leaders Avoid to Maintain Trust

A year-end summary from leaders who have experienced the challenges of scaling LLMs too quickly.

Read time: 2.5 minutes

Effective AI leadership is not about selecting the most advanced model; it is about understanding potential failures as AI becomes core infrastructure.

The first phase of AI adoption is exciting because everything works well enough. Prototypes impress, benchmarks sparkle, and early users forgive odd behavior because it feels new. But once AI shifts from experimentation to enterprise infrastructure, tolerance fades. Legal teams ask harder questions, frontline teams rely on outputs to make decisions, and small failures compound into reputational, operational, and compliance risks.

This is where leadership shows up... not in model demos, but in constraints, clarity, and judgment. Experienced AI leaders don’t scale faster by adding capability; they scale safely by removing ambiguity. They stop shipping systems they can’t explain, stop trusting rankings over outcomes, and stop pretending foundation models behave like neutral utilities instead of opinionated systems with trade-offs baked in.

The 12 Common AI and LLM Mistakes You Should No Longer Make as a Leader:

Key changes as AI moved from experimentation to enterprise infrastructure

Day 1 — Do Not Deploy LLMs That Cannot Be Clearly Explained

If OpenAI, Claude, or Gemini cannot be clearly explained to legal, security, and frontline teams, the model is not deployed.

Day 2 — Do Not Confuse Model Rankings with Business Outcomes

Benchmarks do not determine business success.

Selecting among ChatGPT, Claude, Gemini, or Llama is a systems decision, not a competition based on rankings.

Day 3 — Do Not Treat Foundation Models as Neutral Infrastructure

Each model reflects its training data, reinforcement strategies, and alignment trade-offs.

Assuming neutrality is not optimism; it is a lack of responsibility.

Day 4 — Do Not Deploy RAG Pipelines Without Secured Data Ownership

RAG pipelines with unstructured data do not reduce hallucinations.

They formalize and amplify these issues.

Day 5 — Do Not Treat Prompts as Informal Notes

Prompts should be treated as code.

If prompts are not versioned, reviewed, and tested, instability will occur.

Day 6 — Do Not Allow Agents to Operate Without Oversight

Autonomous agents must have defined permissions and logged actions to prevent operational failures.

Day 7 — Do Not Deploy AI Without a Human Decision Map

Human-in-the-loop is not merely a legal requirement.

It’s a product decision.

Day 8 — Do Not Assume Users Understand LLM Behavior

If misuse is possible, it should be anticipated.

This is a leadership responsibility, not a user issue.

Day 9 — Do Not Treat AI Ethics as a Policy Deck

If ethics are not integrated into user experience, refusal mechanisms, and escalation processes, they are ineffective.

Day 10 — Do Not Deploy Without Continuous Monitoring

Production AI systems require ongoing oversight.

Issues such as drift, injection, latency, and cost will inevitably arise.

Day 11 — Do Not Replace Judgment with AI Confidence

LLMs may present information with confidence, even when incorrect.

Confidence without proper calibration poses significant risks at scale.

Day 12 — Do Not Build Everything AI Makes Possible

Having the capability does not imply permission.

Exercising restraint yields more sustainable results than unchecked ambition.

💡Key Takeaway:

Leading AI today isn’t about choosing the best model; it’s about deciding where AI should influence decisions, where it must be constrained, and where it should never be used. Accuracy earns demos, benchmarks win slides, but judgment earns trust, and trust is the only thing that compounds when AI becomes infrastructure.

👉 LIKE if AI leadership is more about judgment than intelligence.

👉 SUBSCRIBE now for enterprise AI lessons learned the hard way—so you don’t have to.

👉 Follow Glenda Carnate for practical insights on LLMs, risk, and decision design.

Instagram: @glendacarnate

LinkedIn: Glenda Carnate on LinkedIn

X (Twitter): @glendacarnate

👉 COMMENT with the AI mistake you see teams repeating most often.

👉 SHARE with a leader scaling AI beyond pilots and proofs.

Reply